Key Takeaways

- Instant Insights: Real-time processing delivers immediate data analysis for faster decision-making.

- Streaming Architectures: Modern streaming frameworks keep data flowing continuously and process it efficiently.

- Performance Boost: Advanced tools enhance system performance and scalability.

- Strategic Planning: Successful implementation requires careful design and integration.

- Analytics Power: Real-time solutions unlock advanced analytics for competitive advantage.

Introduction to Real-Time Data Processing

Real-time data processing changes how organizations manage and analyze data: instead of waiting for scheduled batch jobs, systems analyze events as they arrive. By combining streaming architectures with stream processing frameworks, businesses can gain insights with minimal delay, improve decision-making, and enhance operational efficiency. This article covers the core concepts, benefits, implementation strategies, and best practices for real-time data processing, as a guide for organizations aiming to adopt these technologies.

Understanding Real-Time Data Processing

Core Concepts

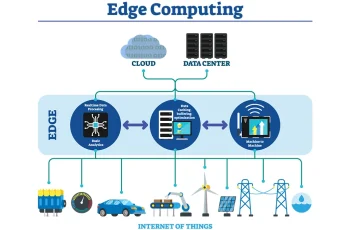

Real-time data processing involves handling data as it arrives, enabling immediate analysis and action. Key components include:

- Data Streaming: Continuous data flow from sources like IoT devices, applications, or user interactions.

- Processing Engines: Tools like Kafka Streams, Apache Flink, or Spark Streaming for real-time computation.

- Analytics Tools: Platforms for visualizing and interpreting data instantly.

- Storage Solutions: Systems like NoSQL databases or cloud storage for efficient data retention.

- Monitoring Systems: Tools to track performance, latency, and system health.
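
To make "handling data as it arrives" concrete, here is a minimal sketch in plain Python. It is not tied to any of the engines named above; `sensor_stream` is a hypothetical stand-in for a live source such as an IoT feed, and `running_average` is an illustrative name. The point is only that each reading produces an updated result immediately, without waiting for the full dataset.

```python
from typing import Iterable, Iterator, Tuple


def running_average(readings: Iterable[float]) -> Iterator[Tuple[float, float]]:
    """Yield (reading, average so far) as soon as each reading arrives."""
    count = 0
    total = 0.0
    for value in readings:
        count += 1
        total += value
        yield value, total / count


def sensor_stream() -> Iterator[float]:
    # Hypothetical stand-in for a live source; a real stream would not end.
    yield from [20.0, 22.0, 21.0, 25.0]


results = list(running_average(sensor_stream()))
for reading, average in results:
    print(f"reading={reading} average_so_far={average}")
```

Because the function is a generator, it keeps only a count and a total in memory, which is the same property that lets stream processors handle unbounded input.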

Benefits of Real-Time Processing

Real-time processing offers significant advantages:

- Instant Insights: Immediate data analysis for timely decisions.

- Improved Decision-Making: Actionable insights enhance strategic outcomes.

- Operational Efficiency: Streamlined processes reduce latency and costs.

- Enhanced Analytics: Advanced tools provide deeper data understanding.

- Competitive Advantage: Faster responses to market changes or customer needs.

Implementation Strategies

Processing Framework

A robust framework is critical for real-time data processing. Key elements include:

- Data Ingestion: Collecting data from diverse sources in real time.

- Stream Processing: Processing data on-the-fly using tools like Apache Flink or Kafka Streams.

- Analytics Tools: Real-time dashboards and visualization platforms for insights.

- Storage Systems: Scalable databases like MongoDB or Cassandra for data persistence.

- Monitoring: Tools like Prometheus for collecting metrics and Grafana for dashboards, to track system reliability.
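
The stream processing step is often built around windowed aggregation. The sketch below groups events into fixed, non-overlapping (tumbling) windows and counts them per key. It is a simplified illustration in plain Python, not the API of Flink or Kafka Streams: the event shape `(timestamp, key)`, the function name `tumbling_window_counts`, and the 10-second window are all assumptions, and it reads a finite list where a real engine would emit each window as it closes.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Event = Tuple[float, str]  # (timestamp in seconds, event key); assumed shape


def tumbling_window_counts(
    events: Iterable[Event], window_seconds: int
) -> Dict[int, Dict[str, int]]:
    """Count events per key within fixed, non-overlapping time windows."""
    counts: Dict[int, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for timestamp, key in events:
        # Every timestamp maps to exactly one window, identified by its start.
        window_start = int(timestamp // window_seconds) * window_seconds
        counts[window_start][key] += 1
    # Convert nested defaultdicts to plain dicts for predictable output.
    return {start: dict(per_key) for start, per_key in counts.items()}


events = [(0.5, "click"), (3.2, "view"), (9.9, "click"), (10.0, "click"), (14.1, "view")]
window_counts = tumbling_window_counts(events, window_seconds=10)
print(window_counts)
```

The per-window results are what would typically be written to the storage layer and shown on a dashboard.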

Technology Integration

Integrating technologies effectively is essential:

- Streaming Platforms: Apache Kafka or AWS Kinesis for reliable data streaming.

- Processing Engines: Apache Spark (Structured Streaming) or Apache Flink for high-speed processing.

- Analytics Tools: Tableau or Power BI for real-time visualization.

- Storage Solutions: Cloud-based options like Amazon S3 or Google BigQuery.

- Security Measures: Encryption and access controls to protect data confidentiality and integrity.

Technical Considerations

Architecture Design

A well-designed architecture ensures success:

- Scalability: Systems must handle growing data volumes seamlessly.

- Performance: Optimized processing minimizes latency.

- Reliability: Fault-tolerant systems ensure continuous operation.

- Security: Robust measures protect sensitive data.

- Cost Efficiency: Infrastructure spend must be balanced against performance targets.

Processing Setup

Key setup aspects include:

- Data Ingestion: Efficient pipelines for real-time data collection.

- Stream Processing: Low-latency processing for immediate results.

- Analytics: Tools for real-time insights and reporting.

- Storage: Scalable, high-speed storage solutions.

- Monitoring: Continuous tracking of system performance and health.
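
Low latency is easier to maintain when it is measured as a distribution rather than an average, since a few slow events can hide behind a healthy mean. The following sketch computes nearest-rank percentiles over a batch of latency samples. The sample values and the `percentile` helper are made up for illustration; in practice a monitoring system would usually compute these figures.

```python
import math
from typing import List


def percentile(samples: List[float], pct: float) -> float:
    """Return the nearest-rank percentile of the samples (0 < pct <= 100)."""
    if not samples:
        raise ValueError("at least one sample is required")
    ordered = sorted(samples)
    # Nearest-rank method: the smallest value with at least pct% of samples at or below it.
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical end-to-end latencies in milliseconds, including one slow outlier.
latencies_ms = [12.0, 15.0, 11.0, 240.0, 14.0, 13.0, 16.0, 12.0, 18.0, 17.0]
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
print(f"p50={p50} ms, p95={p95} ms")
```

Here the median looks healthy while the 95th percentile exposes the outlier, which is why tail percentiles are a common choice for latency alerts.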

Best Practices

System Design

Effective design principles include:

- Scalable Architecture: Build systems that grow with data demands.

- Performance Optimization: Tune frameworks for minimal latency.

- Security Measures: Implement encryption and access controls.

- Monitoring: Use real-time tools to track system health.

- Cost Management: Optimize resource usage to reduce expenses.

Processing Strategy

Strategic elements include:

- Data Quality: Ensure clean, accurate data for reliable insights.

- Processing Efficiency: Streamline workflows for faster results.

- Analytics Capabilities: Leverage advanced tools for deeper insights.

- Security: Protect data with robust protocols.

- Monitoring: Continuously assess system performance.
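
Data quality checks are commonly applied per record, with failures routed aside instead of stopping the stream. The sketch below shows one way this might look. The record fields (`device_id`, `temperature`), the accepted range, and the function names are all hypothetical and would differ for any real pipeline.

```python
from typing import Any, Dict, Iterable, List, Tuple

Record = Dict[str, Any]


def validate(record: Record) -> List[str]:
    """Return a list of problems found in the record; empty means valid."""
    problems: List[str] = []
    device_id = record.get("device_id")
    if not isinstance(device_id, str) or not device_id:
        problems.append("missing device_id")
    temperature = record.get("temperature")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(temperature, bool) or not isinstance(temperature, (int, float)):
        problems.append("temperature is not a number")
    elif not -50 <= temperature <= 150:  # assumed plausible range
        problems.append("temperature out of range")
    return problems


def split_stream(
    records: Iterable[Record],
) -> Tuple[List[Record], List[Tuple[Record, List[str]]]]:
    """Separate valid records from rejected ones, keeping the rejection reasons."""
    valid: List[Record] = []
    rejected: List[Tuple[Record, List[str]]] = []
    for record in records:
        problems = validate(record)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected


records = [
    {"device_id": "a1", "temperature": 21.5},
    {"device_id": "", "temperature": 20},
    {"device_id": "b2", "temperature": "hot"},
    {"device_id": "c3", "temperature": 900},
]
valid, rejected = split_stream(records)
print(len(valid), "valid;", len(rejected), "rejected")
```

Keeping the rejection reasons alongside each record makes it possible to review and replay bad data later rather than silently dropping it.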

Use Cases

Processing Applications

Real-time processing powers various applications:

- Real-Time Analytics: Instant insights for business intelligence.

- Fraud Detection: Immediate identification of suspicious activities.

- IoT Data Processing: Real-time analysis of sensor data.

- Customer Analytics: Personalized experiences based on live data.

- Business Intelligence: Dynamic reporting for strategic decisions.
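
As an illustration of the fraud detection use case, one simple rule is a velocity check: flag an account that makes too many transactions within a short sliding window. The sketch below is a toy example under stated assumptions. The class name `VelocityCheck`, the threshold of 3 transactions per 60 seconds, and the account IDs are invented for illustration, and production systems typically combine many signals rather than a single rule.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class VelocityCheck:
    """Flag accounts that exceed max_events within a sliding time window."""

    def __init__(self, max_events: int, window_seconds: float) -> None:
        self.max_events = max_events
        self.window_seconds = window_seconds
        self._recent: Dict[str, Deque[float]] = defaultdict(deque)

    def is_suspicious(self, account_id: str, timestamp: float) -> bool:
        """Record one transaction and report whether the account is over the limit.

        Assumes timestamps arrive in non-decreasing order per account.
        """
        recent = self._recent[account_id]
        # Drop timestamps that have fallen out of the sliding window.
        while recent and timestamp - recent[0] >= self.window_seconds:
            recent.popleft()
        recent.append(timestamp)
        return len(recent) > self.max_events


check = VelocityCheck(max_events=3, window_seconds=60)
transactions = [
    ("acct-1", 0.0), ("acct-1", 10.0), ("acct-2", 15.0),
    ("acct-1", 20.0), ("acct-1", 30.0), ("acct-1", 100.0),
]
flags = [check.is_suspicious(account, ts) for account, ts in transactions]
print(flags)
```

Each decision is made as the transaction arrives, using only a small amount of per-account state, which is what allows a suspicious pattern to be caught immediately rather than in a later batch report.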

Industry Solutions

Industries leveraging real-time processing include:

- Financial Services: Real-time fraud detection and trading analytics.

- E-commerce: Personalized recommendations and inventory management.

- Healthcare: Real-time patient monitoring and diagnostics.

- Manufacturing: Predictive maintenance and supply chain optimization.

- Technology: Real-time user behavior analysis and system monitoring.

Implementation Challenges

Technical Challenges

Key hurdles include:

- Architecture Complexity: Designing scalable, reliable systems.

- Performance Optimization: Minimizing latency in high-volume environments.

- Data Quality: Ensuring clean, consistent data streams.

- Security Implementation: Protecting data in transit and at rest.

- Cost Management: Balancing performance with infrastructure costs.

Operational Challenges

Operational considerations include:

- Team Skills: Training staff for advanced technologies.

- Process Changes: Adapting workflows for real-time systems.

- Resource Allocation: Ensuring adequate infrastructure and budget.

- Maintenance: Regular updates to maintain system performance.

- Support: Providing ongoing technical support.

Case Studies

Success Story: Financial Services

A leading financial institution implemented real-time processing, achieving:

- 80% Faster Processing: Reduced data analysis time significantly.

- 50% Cost Reduction: Optimized infrastructure for cost efficiency.

- Improved Security: Enhanced data protection measures.

- Better Analytics: Deeper insights into customer behavior.

- Enhanced Insights: Improved decision-making capabilities.

Future Trends

Emerging trends in real-time processing include:

- AI Integration: Machine learning for predictive analytics.

- Advanced Analytics: Sophisticated tools for deeper insights.

- Enhanced Security: Stronger protocols for data protection.

- Better Tools: Next-generation platforms for streamlined processing.

- Improved Practices: Evolving strategies for efficiency and scalability.

FAQ

What are the main benefits of real-time processing?

Real-time processing provides instant insights, better decision-making, improved efficiency, enhanced analytics, and a competitive edge.

How can organizations implement real-time processing?

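Organizations should start with a scalable architecture, integrate streaming, processing, analytics, and storage technologies that fit their workloads, and define a processing strategy that covers data quality, security, and monitoring.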

What are the key challenges in implementation?

Challenges include architecture complexity, performance optimization, data quality, security implementation, and cost management.

Conclusion

Real-time data processing is transforming how organizations analyze and act on data. By adopting modern streaming architectures, leveraging stream processing frameworks, and following best practices, businesses can gain insights with minimal delay, improve decision-making, and build a competitive advantage. Addressing the technical and operational challenges early is essential for a successful implementation and for realizing the gains in analytics and operational efficiency.